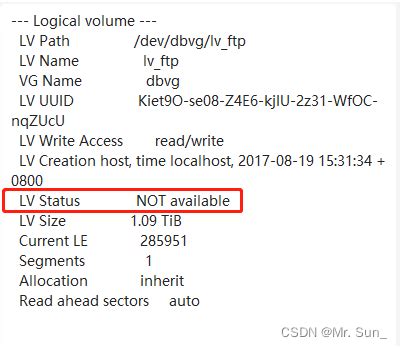

lv status not available in linux | lvscan inactive how to activate

LV: home_athena (on top of thin pool) LUKS encrypted file system. During boot, I can see the following messages: Jun 02 22:59:44 kronos lvm[2130]: pvscan[2130] PV .

On every reboot, the swap and drbd logical volumes aren't activated. I need to use the vgchange -ay command to activate them by hand. Only the root logical volume is available, on this ...

Activate the LV with the lvchange -ay command. Once activated, the LV will show as available.

# lvchange -ay /dev/testvg/mylv

Root Cause. When a logical volume is not active, it shows as NOT available in lvdisplay.

Diagnostic Steps. Check the output of the lvs command and see whether the LV is active or not.

You may need to call pvscan, vgscan or lvscan manually. Or you may need to call vgimport vg00 to tell the LVM subsystem to start using vg00, followed by vgchange -ay vg00 to activate it. Possibly you should do the reverse, i.e., vgchange -an ...

When I call vgchange -a y, you can see in the journal: pluto lvm[972]: Target (null) is not snapshot. After a long time the command ends and the logical volumes are available. device-mapper: reload ioctl on (253:7) failed: Invalid argument. 2 logical volume(s) in volume group "data-vg" now active.
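The lvs check and the lvchange -ay step described above could be combined roughly as follows. This is a minimal sketch, not a definitive procedure: the helper name inactive_lvs is made up for illustration, and it assumes the fifth character of the lv_attr field is "a" for an active LV, as in "-wi-a-----". The function only filters text on stdin, so it can be tried on saved lvs output without touching any volumes.

```shell
#!/bin/sh
# inactive_lvs (hypothetical helper): read lines in the format produced by
#   lvs --noheadings -o vg_name,lv_name,lv_attr
# on stdin and print "vg/lv" for every LV whose state flag is not "a".
inactive_lvs() {
    # The fifth character of lv_attr is the state flag; "a" means active.
    awk 'NF >= 3 && substr($3, 5, 1) != "a" { print $1 "/" $2 }'
}

# Intended use on a real system (needs root and the LVM tools), left as a
# comment so that loading this file never changes anything:
#   lvs --noheadings -o vg_name,lv_name,lv_attr | inactive_lvs |
#   while read -r lv; do lvchange -ay "$lv"; done
```

Running the filter first and reviewing its output before activating anything is the safer order, since an LV may be inactive on purpose.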

The problem is that after a reboot, none of my logical volumes remain active. The lvdisplay command shows their status as "NOT available". I can manually issue an "lvchange -a y /dev/" and they're back, but I need them to come up automatically with the server.

LV Status: The current status of the logical volume. An active logical volume has the status available, and an inactive logical volume has the status NOT available. # open: How many times the logical volume's device is currently open (a mounted filesystem counts as an open).

The machine now halts during boot because it can't find certain logical volumes in /mnt. When this happens, I hit "m" to drop down to a root shell, and I see the following (forgive me for inaccuracies, I'm recreating this): $ lvs

I just converted my LVM2 root filesystem from linear LVM2 (single HDD: sda) to LVM2 raid1 (using the lvconvert -m1 --type raid1 /dev/ubuntu/root /dev/sdb5 command). But after this conversion I can't boot my Ubuntu 12.10 (kernel 3.5.0-17-generic).
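The LV Status field described above can also be read from a script. The sketch below is illustrative only: the function name not_available_lvs is hypothetical, and it assumes lvdisplay prints an "LV Path" line before each "LV Status" line (older LVM releases may print "LV Name" instead, which this filter does not handle). It reads lvdisplay output on stdin and prints the path of each LV reported as NOT available.

```shell
#!/bin/sh
# not_available_lvs (hypothetical helper): read lvdisplay output on stdin
# and print the LV Path of every volume whose LV Status is "NOT available".
not_available_lvs() {
    awk '
        # Remember the most recent LV Path line.
        $1 == "LV" && $2 == "Path"   { path = $3 }
        # "LV Status  NOT available" has NOT as its third field.
        $1 == "LV" && $2 == "Status" && $3 == "NOT" { print path }
    '
}

# Intended use on a real system (needs root):
#   lvdisplay | not_available_lvs
```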

I was using an FCP disks -> Multipath -> LVM setup, and it was no longer being mounted after an upgrade from 18.04 to 20.04. I was seeing these errors at boot - I thought that is ok to sort out duplica...

After a reboot the logical volumes come up with a status of "NOT available" and fail to be mounted as part of the boot process. After the boot process, I'm able to run "lvchange -ay ." to make the logical volumes "available" and then mount them.

sys_exit_group. system_call_fastpath. I added rdshell to my kernel params and rebooted again. After the same error, the boot sequence dropped into rdshell. At the shell, I ran lvm lvdisplay, and it found the volumes, but they were marked as LV Status NOT available. dracut:/# lvm lvdisplay